AI + Web3: The Tower and the Square

TL;DR:

- AI-themed Web3 projects have become attractive investment targets in both the primary and secondary markets.

- Web3's opportunities in the AI industry lie in using distributed incentives to coordinate long-tail supply across data, storage, and computing, and in establishing open-source models and a decentralized marketplace for AI Agents.

- AI's main roles in the Web3 industry are in on-chain finance (crypto payments, trading, data analysis) and development assistance.

- The value of AI+Web3 lies in how the two complement each other: Web3 is expected to counter the centralization of AI, and AI is expected to help Web3 break out of its niche.

Introduction

Over the past two years, AI development has accelerated. The butterfly effect set off by ChatGPT has not only opened up a new world of generative AI but also stirred up a wave in the distant Web3 space.

Backed by the AI narrative, crypto fundraising has picked up noticeably from its earlier slowdown. According to media statistics, 64 Web3+AI projects completed financing in the first half of 2024 alone, and the AI-based operating system Zyber365 raised the largest round, $100 million in its Series A.

The secondary market is even more buoyant. Data from the crypto aggregator Coingecko show that, in little more than a year, the total market capitalization of the AI sector reached $485 billion, with 24-hour trading volume of nearly $86 billion. Progress in mainstream AI technology has brought obvious benefits: after the release of OpenAI's text-to-video model Sora, the average price of the AI sector rose 151%. The AI effect has also spread to Meme coins, one of crypto's biggest money-attracting sectors: GOAT, the first AI Agent MemeCoin, quickly became popular and reached a valuation of $1.4 billion, setting off the AI Meme craze.

Research and discussion around AI+Web3 are just as heated. From AI+DePIN to AI Memecoins and on to today's AI Agents and AI DAOs, FOMO can no longer keep up with the speed at which new narratives rotate.

AI+Web3, a pairing of terms loaded with hot money, hype, and visions of the future, is inevitably seen as a marriage arranged by capital. Beneath this splendid cloak, it is hard to tell whether we are looking at a playground for speculators or the eve of a dawn.

To answer this question, the key consideration for both sides is whether each is better off with the other. Can each benefit from the other's model? In this article, standing on the shoulders of our predecessors, we try to examine this picture: how can Web3 play a role at each layer of the AI technology stack, and what new vitality can AI bring to Web3?

What opportunities does Web3 have across the AI stack?

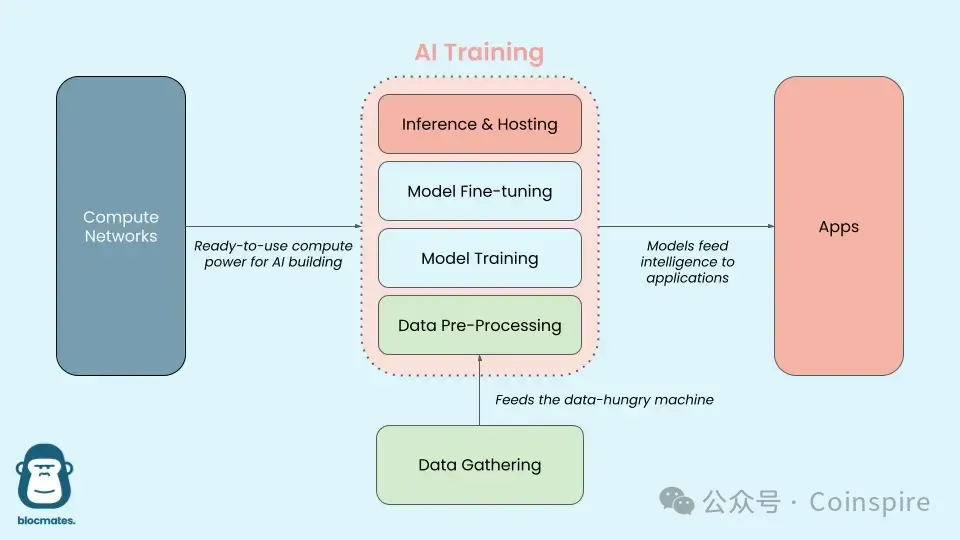

Antes de nos aprofundarmos neste tópico, precisamos de compreender o conjunto técnico dos grandes modelos de IA:

Fonte da imagem: Delphi Digital

Put simply, a "large model" is like a human brain. In its early stages, this brain is like a newborn that has just arrived in the world and needs to observe and absorb vast amounts of external information to understand it. This is the data "collection" stage. Because computers lack the multiple senses humans have, the large volume of unlabeled external information must be "pre-processed" before training into a format computers can understand and use.

Once the data is fed in, the AI builds a model capable of understanding and prediction through 'training', which can be seen as the process of a baby gradually understanding and learning about the outside world. The model's parameters are like the language ability a baby keeps adjusting as it learns. When the learning material becomes specialized, or when the model receives feedback from interacting with people and corrects itself, it enters the 'fine-tuning' stage of large models.

As children grow up and learn to speak, they can understand meaning and express their feelings and thoughts in new conversations, which resembles the 'inference' of large AI models: the model can predict and analyze new language and text inputs. Babies use language to express feelings, describe objects, and solve problems, which is likewise similar to how a trained large model is applied to specific tasks during inference, such as image classification and speech recognition.

The AI Agent, meanwhile, is closer to the next form of large models: able to carry out tasks independently and pursue complex goals, it can not only think but also remember, plan, and use tools to interact with the world.

Today, in response to AI's pain points at each layer of the stack, Web3 has begun to form a multi-layered, interconnected ecosystem covering every stage of the AI model pipeline.

One, Base Layer: The Airbnb of Computing Power and Data

Computing power

Currently, one of AI's highest costs is the computing power and energy needed to train models and run inference.

For example, Meta's LLAMA3 requires 16,000 NVIDIA H100 GPUs (a top-tier graphics processing unit designed for AI and high-performance computing workloads) to complete training in 30 days. The 80GB version of the H100 is priced between $30,000 and $40,000, which implies a computing hardware investment of $400-700 million (GPUs + network chips). In addition, a month of training consumes 16 billion kilowatt-hours, with energy costs of nearly $20 million per month.
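The GPU share of that hardware bill can be checked from the two figures quoted above (16,000 cards at $30,000 to $40,000 each). The snippet below is only that multiplication; networking hardware is extra and is not modelled, and the function name is made up for illustration.

```python
# Figures quoted in the text; everything else is derived arithmetic.
GPU_COUNT = 16_000
UNIT_PRICE_USD = (30_000, 40_000)  # low and high price of one 80GB H100


def gpu_spend_range(count, price_range):
    """Return the (low, high) total GPU spend in USD."""
    low_price, high_price = price_range
    return count * low_price, count * high_price


low, high = gpu_spend_range(GPU_COUNT, UNIT_PRICE_USD)
print(f"GPU-only spend: ${low / 1e6:.0f}M to ${high / 1e6:.0f}M")
```

The result lands in the hundreds of millions of dollars before any networking equipment is added.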

Relieving the pressure on AI computing power is also the earliest area where Web3 intersected with AI: DePIN (decentralized physical infrastructure networks). The data site DePin Ninja currently lists more than 1,400 projects, including representative GPU compute-sharing projects such as io.net, Aethir, Akash, and Render Network.

The core logic is this: the platform lets individuals or entities with idle GPU resources contribute their computing power in a permissionless, decentralized way. An online marketplace for buyers and sellers, similar to Uber or Airbnb, raises the utilization of underused GPUs, and end users get more cost-effective computing resources. At the same time, a staking mechanism ensures that providers face corresponding penalties if they violate quality-control rules or suffer network outages.

Its characteristics are:

- Pooling idle GPU resources: suppliers are mainly independent small and medium-sized third-party data centers, operators such as crypto mining farms with surplus computing power, and mining hardware from networks whose consensus mechanism is PoS, such as FileCoin and ETH miners. Some projects are also working to lower the hardware barrier to entry, such as exolab, which uses local devices like MacBooks, iPhones, and iPads to build a computing network for running large-model inference.

- Serving the long-tail market for AI computing power: a. “On the technical side,” decentralized computing markets are better suited to inference. Training depends on the data-processing capacity of very large GPU clusters, whereas inference places relatively low demands on GPU performance; Aethir, for example, focuses on low-latency rendering and AI inference applications. b. “On the demand side,” small and medium-sized buyers of computing power will not train their own large models; they will only optimize and fine-tune around a few leading models, and these scenarios are a natural fit for distributed idle computing resources.

- Decentralized ownership: the technical significance of blockchain here is that resource owners always keep control of their resources, can adjust supply flexibly according to demand, and earn income at the same time.
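The marketplace-plus-staking logic described above can be sketched in a few lines. This is a toy model, not the mechanism of io.net, Aethir, Akash, or Render Network: the `Provider` fields, the uptime threshold, and the slash fraction are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    price_per_gpu_hour: float  # asking price in USD (hypothetical)
    stake: float               # collateral locked by the provider
    uptime: float              # measured fraction of time online, 0..1


MIN_UPTIME = 0.95      # assumed quality-control threshold
SLASH_FRACTION = 0.10  # assumed share of stake lost per violation


def cheapest_provider(providers):
    """Match a buyer with the lowest-priced provider that still has stake."""
    eligible = [p for p in providers if p.stake > 0]
    return min(eligible, key=lambda p: p.price_per_gpu_hour)


def enforce_quality(provider):
    """Slash part of the stake if uptime falls below the threshold."""
    if provider.uptime >= MIN_UPTIME:
        return 0.0
    penalty = provider.stake * SLASH_FRACTION
    provider.stake -= penalty
    return penalty


providers = [
    Provider("small-datacenter", 1.20, stake=1_000.0, uptime=0.99),
    Provider("idle-mining-farm", 0.90, stake=500.0, uptime=0.80),
]
chosen = cheapest_provider(providers)
penalty = enforce_quality(chosen)
print(chosen.name, penalty, chosen.stake)
```

The cheaper provider wins the order but loses part of its stake for poor uptime, which is the trade-off the staking mechanism is meant to price in.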

Data

Data is the foundation of AI. Without data, computation is useless, and the relationship between data and models is captured by the saying 'Garbage in, Garbage out': the quantity and quality of the data determine the output quality of the final model. For today's AI models, training data determines the model's language ability, comprehension, and even its values and human-like behavior. At present, AI's data demands run into difficulty in four main areas:

- Data hunger: training AI models depends heavily on large volumes of input data. Public information shows that the parameter count of OpenAI's GPT-4 has reached the trillion level.

- Data quality: as AI is combined with more industries, new requirements have emerged for the timeliness, diversity, and domain expertise of industry-specific data, and for ingesting emerging data sources such as social media sentiment.

- Privacy and compliance: countries and companies are gradually recognizing the importance of high-quality datasets and are placing restrictions on data scraping.

- High data-processing costs: data volumes are large and processing is complex. Public information shows that more than 30% of AI companies' R&D costs go to basic data collection and processing.

Currently, Web3's solutions fall into the following four areas:

1. Data Collection: real-world data that can be scraped for free is running out quickly, and AI companies' spending on data rises year after year. Yet this spending has not flowed back to the people who actually contribute the data; platforms have captured all of the value it creates. Reddit, for example, has generated a total of $203 million in revenue from data-licensing agreements with AI companies.

Web3's vision is to let the users who actually contribute also share in the value their data creates, and to obtain more personal and more valuable data from users cost-effectively through distributed networks and incentive mechanisms.

- Grass is a decentralized data layer and network: by running Grass nodes, users contribute idle bandwidth and relay traffic to capture real-time data from across the internet, and receive token rewards;

- Vana introduces the distinctive concept of a Data Liquidity Pool (DLP): users can upload their private data (such as purchase records, browsing habits, and social media activity) to a specific DLP and choose whether to authorize specific third parties to use it;

- On PublicAI, users can contribute data by posting on X with #AI or #Web3 as a category tag and mentioning @PublicAI.

2. Data Pre-processing: collected data is usually noisy and contains errors, so it must be cleaned and converted into a usable format before training, which involves the repetitive tasks of standardization, filtering, and handling missing values. This is one of the few manual stages in the AI industry and has given rise to the data-labeling profession. As models demand higher data quality, the bar for data labelers rises as well, and this kind of work lends itself naturally to Web3's decentralized incentive mechanisms.

- Grass and OpenLayer are both considering adding data labeling as a key step.

- Synesis proposed the 'Train2earn' concept, which emphasizes data quality: users are rewarded for providing labeled data, comments, or other forms of contribution.

- The data-labeling project Sapien gamifies labeling tasks and lets users stake points to earn more points.
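The three pre-processing tasks named above (standardization, filtering, and handling missing values) can be illustrated with a minimal sketch. The record layout with `text` and `label` keys and the `"unlabeled"` placeholder are assumptions made for this example, not the format of any project mentioned here.

```python
def clean_records(records):
    """Standardize text, drop empties and duplicates, fill missing labels."""
    cleaned = []
    seen = set()
    for rec in records:
        # Standardize: trim whitespace and lowercase the text.
        text = (rec.get("text") or "").strip().lower()
        # Filter: skip empty entries and exact duplicates.
        if not text or text in seen:
            continue
        seen.add(text)
        # Missing values: fall back to a placeholder label.
        label = rec.get("label") or "unlabeled"
        cleaned.append({"text": text, "label": label})
    return cleaned


raw = [
    {"text": "  GPU prices are rising ", "label": "market"},
    {"text": "gpu prices are rising", "label": "market"},  # duplicate once normalized
    {"text": "", "label": "noise"},                        # empty, dropped
    {"text": "New airdrop announced", "label": None},      # label missing
]
cleaned = clean_records(raw)
print(cleaned)
```

Records that end up as `"unlabeled"` are the ones a human labeler would still need to review, which is the work the incentive schemes above try to reward.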

3. Data Privacy and Security: to be clear, data privacy and data security are two different concepts. Data privacy concerns the handling of sensitive data, while data security protects data against unauthorized access, destruction, and theft. Accordingly, the advantages and potential applications of Web3 privacy technologies show up in two areas: (1) training on sensitive data; (2) data collaboration, where multiple data owners jointly take part in AI training without sharing their raw data.
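To make the idea of collaborating without sharing raw data concrete, here is a small sketch using additive secret sharing. This is a swapped-in textbook technique chosen because it fits in a few lines; it is not the protocol of any Web3 project, and the modulus and function names are illustrative assumptions.

```python
import secrets

PRIME = 2**61 - 1  # modulus for the shares; an illustrative choice


def split_into_shares(value, n_parties):
    """Split `value` into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % PRIME
    return shares + [last]


def secure_sum(private_values):
    """Each owner shares its value; only per-party partial sums are combined."""
    n = len(private_values)
    all_shares = [split_into_shares(v, n) for v in private_values]
    # Party j adds up the j-th share it received from every owner.
    partial_sums = [sum(owner[j] for owner in all_shares) % PRIME for j in range(n)]
    return sum(partial_sums) % PRIME


private_values = [12, 30, 7]  # e.g. local statistics held by three data owners
total = secure_sum(private_values)
print(total)
```

Each individual share is a uniformly random number, so no single party learns another owner's input; only the combined total is recovered.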

Common privacy technologies in Web3 currently include:

- Trusted Execution Environments (TEE), such as Super Protocol;

- Fully Homomorphic Encryption (FHE), such as BasedAI, Fhenix.io, or Inco Network;

- Zero-knowledge technology (zk), such as Reclaim Protocol, which uses zkTLS to generate zero-knowledge proofs of HTTPS traffic, letting users securely import activity, reputation, and identity data from external websites without exposing sensitive information.

However, the field is still at an early stage, and most projects are still exploring. One current dilemma is that computing costs are too high. Some examples:

- The zkML framework EZKL takes about 80 minutes to generate a proof for a 1M-nanoGPT model.

- According to Modulus Labs, zkML overhead is more than 1,000 times that of pure computation.

4. Data Storage: once the data has been obtained, it needs somewhere to be stored on-chain, as do the LLMs generated from it. Data availability (DA) is the core issue: before the Ethereum Danksharding upgrade, Ethereum's throughput was 0.08MB per second, whereas AI model training and real-time inference typically require data throughput of 50 to 100GB per second. A gap of this order of magnitude leaves existing on-chain solutions inadequate for 'resource-intensive AI applications'.

- 0g.AI is a representative project in this category. It is a decentralized storage solution designed for high-performance AI workloads; its key features are high performance and scalability, and it supports fast upload and download of large datasets through advanced sharding and erasure coding, with data transfer speeds approaching 5GB per second.
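The size of the throughput gap described in the storage paragraph can be worked out directly from the quoted figures. The conversion factor below assumes decimal units (1 GB = 1,000 MB), which the text does not specify, and treats the on-chain figure as a per-second rate.

```python
MB_PER_GB = 1_000  # decimal units; an assumption, the text does not say

onchain_mb_per_s = 0.08       # pre-Danksharding figure quoted in the text
ai_need_gb_per_s = (50, 100)  # range quoted in the text

# How many times more throughput AI workloads need than the chain offered.
gap = tuple(need * MB_PER_GB / onchain_mb_per_s for need in ai_need_gb_per_s)
print(f"AI workloads need {gap[0]:,.0f}x to {gap[1]:,.0f}x more throughput")
```

That is a gap of roughly six orders of magnitude, which is why dedicated storage and DA layers are proposed rather than incremental on-chain tuning.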

Two, Middleware: Model Training and Inference

Decentralized open-source model marketplace

The debate over whether AI models should be open source or closed source has never stopped. The collective innovation that open source brings is an advantage closed-source models cannot match. But without a profit model, how can open-source models keep developers motivated? That is a question worth pondering. Baidu founder Robin Li asserted in April this year: "Open-source models will fall further and further behind."

In response, Web3 proposes a decentralized open-source model marketplace: tokenize the model itself, reserve a certain share of tokens for the team, and direct part of the model's future revenue to token holders.

- The Bittensor protocol sets up a P2P marketplace for open-source models made up of dozens of 'subnets', in which resource providers (computing, data collection/storage, machine-learning talent) compete to meet the goals of each subnet's owner. Subnets can interact with and learn from one another, producing greater intelligence. Rewards are allocated by community vote and then distributed among the subnets according to competitive performance.

- ORA introduces the Initial Model Offering (IMO), which tokenizes AI models so they can be bought, sold, and developed on decentralized networks.

- Sentient, a decentralized AGI platform, incentivizes people to collaborate on, build, replicate, and extend AI models, and rewards contributors.

- Spectral Nova focuses on the creation and application of AI and ML models.
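The model-tokenization idea described above (a team allocation plus a share of future revenue routed to token holders) comes down to a pro-rata split. The sketch below assumes a 50% holder share and made-up balances; it does not reflect the actual tokenomics of Bittensor, ORA, Sentient, or Spectral.

```python
def distribute_revenue(revenue, holdings, holder_share=0.5):
    """Split `holder_share` of the revenue among token holders pro rata."""
    pool = revenue * holder_share
    total_tokens = sum(holdings.values())
    return {
        holder: pool * amount / total_tokens
        for holder, amount in holdings.items()
    }


# Hypothetical token balances, including the team's reserved allocation.
holdings = {"team": 200_000, "alice": 500_000, "bob": 300_000}
payouts = distribute_revenue(10_000.0, holdings, holder_share=0.5)
print(payouts)
```

Because the team holds tokens like everyone else, its income grows only if the model earns revenue, which is the incentive the approach relies on.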

Verifiable Inference

For the 'black box' problem in AI inference, the standard Web3 solution is to have multiple validators repeat the same operation and compare results. However, given the current shortage of high-end 'Nvidia chips', the obvious challenge for this approach is the high cost of AI inference.
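A minimal sketch of that replicate-and-compare approach is shown below. The validator functions are stand-ins for deterministic inference calls, and the two-thirds quorum is an assumed parameter rather than a rule from any specific network.

```python
from collections import Counter


def verify_by_replication(task_input, validators, quorum=2 / 3):
    """Run the same computation on every validator and accept the majority result."""
    results = [run(task_input) for run in validators]
    winner, votes = Counter(results).most_common(1)[0]
    accepted = votes / len(results) >= quorum
    dissenters = [i for i, r in enumerate(results) if r != winner]
    return (winner if accepted else None), dissenters


def honest_model(x):
    """Stand-in for a deterministic inference call."""
    return x * 2 + 1


def faulty_model(x):
    """A validator that returns a wrong result."""
    return x * 2


result, dissenters = verify_by_replication(
    20, [honest_model, honest_model, faulty_model]
)
print(result, dissenters)
```

Every validator reruns the full inference, so the total cost grows linearly with the number of validators, which is exactly the cost problem noted above.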

A more promising solution is to generate ZK proofs for off-chain AI inference computations. In a zero-knowledge proof, a prover can convince a verifier that a statement is true without revealing anything beyond the fact that it is true, which allows AI model computations to be verified on-chain without permission. This requires proving on-chain, cryptographically, that the off-chain computation was completed correctly (for example, that the dataset was not tampered with) while keeping all data confidential.

The main advantages include:

- Scalability: zero-knowledge proofs can quickly confirm large numbers of off-chain computations. Even as the number of transactions grows, a single zero-knowledge proof can verify all of them.

- Privacy protection: details of the data and AI models remain confidential, while all parties can verify that neither has been tampered with.

- Trustlessness: computations can be confirmed without relying on centralized parties.

- Web2 integration: by definition, Web2 runs off-chain, so verifiable inference can help bring its datasets and AI computations on-chain, which helps Web3 adoption.

Current Web3 technologies for verifiable inference are as follows:

- ZKML: combines zero-knowledge proofs with machine learning to keep data and models private and confidential, enabling verifiable computation without revealing certain underlying properties. Modulus Labs has released a ZKML-based ZK prover built for AI, which checks whether AI providers on-chain run their algorithms correctly, although its customers so far are mainly on-chain DApps.

- opML: applies the optimistic rollup principle, improving the scalability and efficiency of ML computation by verifying only when a dispute arises. In this model, only a small portion of the results produced by a 'validator' needs to be checked, while the economic penalty for slashing is set high enough to raise the cost of cheating for validators and save redundant computation.

- TeeML: uses a trusted execution environment to run ML computations securely, protecting data and models from tampering and unauthorized access.
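The economics behind the optimistic approach can be illustrated with a simplified random-audit model: results are accepted by default, a fraction is recomputed, and a mismatch costs the validator its stake. This is a teaching sketch and not the dispute protocol of any particular opML system; the sample rate, slash amount, and function names are assumptions.

```python
import random

SLASH_AMOUNT = 100.0  # assumed stake forfeited on a proven fault


def optimistic_check(claims, recompute, sample_rate, rng):
    """Accept claims by default; recompute a random sample and slash mismatches."""
    slashed = {}
    rechecked = 0
    for validator, task_input, claimed in claims:
        if rng.random() >= sample_rate:
            continue  # accepted optimistically, no recomputation
        rechecked += 1
        if recompute(task_input) != claimed:
            slashed[validator] = slashed.get(validator, 0.0) + SLASH_AMOUNT
    return rechecked, slashed


def cheating_is_unprofitable(gain, sample_rate, slash):
    """Compare the expected penalty with the expected gain from one false claim."""
    return sample_rate * slash > (1 - sample_rate) * gain


def square(x):
    """Stand-in for the reference ML computation."""
    return x * x


claims = [("v1", 3, 9), ("v2", 4, 17)]  # v2 reports a wrong result
rechecked, slashed = optimistic_check(claims, square, 1.0, random.Random(0))
print(rechecked, slashed)
print(cheating_is_unprofitable(gain=5.0, sample_rate=0.1, slash=SLASH_AMOUNT))
```

The demo audits every claim (`sample_rate=1.0`) so the outcome is deterministic; in the setting described above, the rate would be small and the slash amount large enough that cheating still has negative expected value.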

Three, Application Layer: AI Agent

AI development is already shifting its focus from model capabilities to the AI Agent landscape. Technology companies such as OpenAI, the AI unicorn Anthropic, and Microsoft are turning to AI Agent development in an attempt to break through the current technical plateau of LLMs.

OpenAI defines an AI Agent as a system with an LLM as its brain that can autonomously perceive, plan, remember, and use tools, and can complete complex tasks automatically. When AI goes from being a tool that is used to a subject that can use tools, it becomes an AI Agent. This is also why AI Agents could become the most ideal intelligent assistants for humans.

What can Web3 bring to Agents?

1. Decentralization

Web3's decentralization can make the Agent system more decentralized and autonomous. Incentive and penalty mechanisms for validators and delegators can help democratize the Agent system; GaiaNet, Theoriq, and HajimeAI are all attempting this.

2. Cold Start

Developing and iterating an AI Agent often takes substantial funding, and Web3 can help promising AI Agent projects obtain early-stage financing and get through the cold start.

- Virtuals Protocol launched fun.virtuals, a platform for creating AI Agents and issuing their tokens, where any user can deploy an AI Agent with one click and 100% of the AI Agent's tokens are distributed through a fair launch.

- Spectral has proposed a product concept that supports issuing AI Agent assets on-chain: by issuing tokens through an IAO (Initial Agent Offering), AI Agents can raise funds directly from investors and become members of DAO governance, giving investors the chance to take part in the project's development and share in future profits.

How does AI empower Web3?

AI's impact on Web3 projects is evident: it benefits blockchain technology by optimizing on-chain operations (such as smart contract execution, liquidity optimization, and AI-driven governance decisions). It can also provide better data-driven insights, strengthen on-chain security, and lay the groundwork for new Web3-based applications.

One, AI and on-chain finance

AI and the Crypto Economy

On August 31, Coinbase CEO Brian Armstrong announced the first AI-to-AI crypto transaction on the Base network, stating that AI Agents can now transact with humans, merchants, or other AIs on Base using USDC, with transactions that are instant, global, and free.

Beyond payments, Luna from Virtuals Protocol also demonstrated for the first time how AI Agents execute on-chain transactions autonomously. This drew attention and positioned AI Agents, as intelligent entities that can perceive their environment, make decisions, and act, as the future of on-chain finance. The potential scenarios for AI Agents currently are as follows:

1. Information gathering and forecasting: help investors collect exchange announcements, public project information, panic sentiment, public-opinion risks, and so on; analyze and assess asset fundamentals and market conditions in real time; and forecast trends and risks.

2. Asset management: recommend suitable investment targets to users, optimize asset allocation, and execute trades automatically.

3. Financial experience: help investors choose the fastest on-chain trading route, automate manual operations such as cross-chain transfers and gas-fee adjustments, and lower the barrier and cost of on-chain financial activity.

Imagine this scenario: you instruct the AI Agent, "I have 1000USDT, please help me find the highest-yielding combination with a lock-up period of no more than one week." The AI Agent replies: "I suggest an initial allocation of 50% in A, 20% in B, 20% in X, and 10% in Y. I will monitor interest rates and watch for changes in their risk levels, and rebalance when necessary." Beyond that, hunting for promising airdrop projects and spotting community buzz around Memecoin projects are things AI Agents may do in the future.

Image source: Biconomy
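The allocation in the scenario above (1000 USDT split 50/20/20/10 across A, B, X, and Y, with rebalancing when needed) can be sketched as follows. The 5% drift tolerance and both function names are assumptions for illustration; a real agent would also need price feeds and transaction execution, which are left out.

```python
def allocate(capital, weights):
    """Turn target weights into position sizes; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return {name: capital * w for name, w in weights.items()}


def needs_rebalance(positions, weights, tolerance=0.05):
    """True if any position drifts more than `tolerance` from its target weight."""
    total = sum(positions.values())
    return any(
        abs(positions[name] / total - w) > tolerance
        for name, w in weights.items()
    )


weights = {"A": 0.50, "B": 0.20, "X": 0.20, "Y": 0.10}
positions = allocate(1000, weights)
print(positions, needs_rebalance(positions, weights))

# Suppose position A appreciates while the others stay flat.
drifted = dict(positions, A=700.0)
print(needs_rebalance(drifted, weights))
```

Checking drift against a tolerance, rather than rebalancing on every price change, keeps the number of on-chain transactions (and gas fees) down.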

Currently, the AI Agent wallet Bitte and the AI interaction protocol Wayfinder are making such attempts. Both are working to plug into OpenAI's model API so that users can direct agents to carry out various on-chain operations from a ChatGPT-like chat window. For example, the first prototype Wayfinder released in April this year demonstrated four basic operations, swap, send, bridge, and stake, on the Base, Polygon, and Ethereum mainnets.

The decentralized Agent platform Morpheus also supports building such Agents. Biconomy, for example, has demonstrated a flow in which an AI Agent is authorized to swap ETH for USDC without requiring wallet permissions.

AI and on-chain transaction security

In the Web3 world, the security of on-chain transactions is crucial. AI can be used to strengthen the security and privacy protection of on-chain transactions, with potential scenarios including:

Transaction monitoring: real-time data technology monitors abnormal trading activity and provides real-time alerting infrastructure for users and platforms.

Risk analysis: helps platforms analyze customers' trading-behavior data and assess their risk level.
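As a rough illustration of transaction monitoring, the sketch below flags transfers that sit far from the typical amount, using the median absolute deviation. It is a simple statistical rule standing in for the AI models such platforms would use; the threshold and sample data are invented for the example.

```python
from statistics import median


def flag_anomalies(amounts, threshold=5.0):
    """Return indices of transfers far from the median, measured in MAD units."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # all amounts (nearly) identical, nothing to flag
    return [i for i, a in enumerate(amounts) if abs(a - med) / mad > threshold]


transfers = [120, 95, 130, 110, 105, 9_500, 115, 100]  # hypothetical amounts
suspicious = flag_anomalies(transfers)
print(suspicious)
```

The median-based score is used instead of a mean and standard deviation because a single very large transfer would otherwise inflate the baseline it is being compared against.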

For example, the Web3 security platform SeQure uses AI to detect and prevent malicious attacks, fraud, and data leaks, and provides real-time monitoring and alerting to keep on-chain transactions secure and stable. Similar security tools include the AI-powered Sentinel.

Two, AI and on-chain infrastructure

AI and on-chain data

AI plays an important role in collecting and analyzing on-chain data, for example:

- Web3 Analytics: an AI-based analytics platform that uses machine-learning and data-mining algorithms to collect, process, and analyze on-chain data.

- MinMax AI: provides AI-based on-chain data analysis tools that help users uncover potential market opportunities and trends.

- Kaito: a Web3 search platform built on an LLM-based search engine.

- Followin: integrated with ChatGPT, it gathers and consolidates relevant information scattered across different websites and community platforms for presentation.

- Another application scenario is oracles: AI can pull prices from multiple sources to provide accurate price data. For example, Upshot uses AI to appraise the volatile prices of NFTs, achieving a percentage error of 3-10% across more than one hundred million appraisals per hour.
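The oracle idea of combining prices from several sources, and the notion of percentage error, can be sketched with a median-based aggregator. This is a conventional baseline rather than Upshot's model; the source names, quotes, and 10% outlier cut-off are assumptions.

```python
from statistics import median


def aggregate_price(quotes, max_deviation=0.10):
    """Median of the quotes after discarding those far from the initial median."""
    mid = median(quotes.values())
    kept = [p for p in quotes.values() if abs(p - mid) / mid <= max_deviation]
    return median(kept)


def percent_error(estimate, actual):
    """Absolute error of an estimate as a percentage of the actual value."""
    return abs(estimate - actual) / actual * 100


quotes = {"source_a": 100.0, "source_b": 101.0, "source_c": 99.0, "source_d": 150.0}
price = aggregate_price(quotes)
print(price, percent_error(105.0, 100.0))
```

Dropping the outlying quote before taking the median keeps a single bad or manipulated source from moving the reported price.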

AI and Development & Auditing

Recently, Cursor, a Web2 AI code editor, has drawn a lot of attention in the developer community. On its platform, users only need to describe what they want in natural language, and Cursor automatically generates the corresponding HTML, CSS, and JavaScript code, greatly simplifying software development. The same logic applies to improving the efficiency of Web3 development.

Today, deploying smart contracts and DApps on public chains usually means working in dedicated programming languages such as Solidity, Rust, and Move. The vision behind these newer languages is to expand the design space of decentralized blockchains and make them better suited to DApp development. However, given the significant shortage of Web3 developers, developer education has always been a thorny problem.

AI assistance in Web3 development can be imagined in scenarios such as automatic code generation, smart contract verification and testing, DApp deployment and maintenance, intelligent code completion, and AI chat answers to difficult development questions. AI assistance not only improves development efficiency and accuracy but also lowers the barrier to programming, allowing non-programmers to turn their ideas into working applications and bringing new vitality to decentralized technology.

The most eye-catching examples right now are one-click token launch platforms such as Clanker, an AI-driven 'Token Bot' designed for quick DIY token deployment. You simply tag Clanker in a client of the SocialFi protocol Farcaster, such as Warpcast or Supercast, tell it your token idea, and it launches the token for you on the public chain Base.

There are also contract development platforms such as Spectral, which offers one-click generation and deployment of smart contracts to lower the barrier to Web3 development, so that even novice users can compile and deploy smart contracts.

On the auditing side, the Web3 audit platform Fuzzland uses AI to help auditors check code for vulnerabilities, providing natural-language explanations to assist audit professionals. Fuzzland also uses AI to provide natural-language explanations of formal specifications and contract code, along with sample code to help developers understand potential problems in their code.

Three, AI and the New Web3 Narrative

The rise of generative AI brings new possibilities to Web3's new narratives.

NFT: AI injects creativity into generative NFTs. With AI, a wide variety of unique artworks and characters can be generated, and these generative NFTs can become characters, props, or scene elements in games, virtual worlds, or metaverses. Binance's Bicasso, for example, lets users generate NFTs by uploading images and entering keywords for AI processing. Similar projects include Solvo, Nicho, IgmnAI, and CharacterGPT.

GameFi: with AI-driven natural language generation, image generation, and intelligent NPCs, GameFi is expected to improve efficiency and innovation in game content production. For example, AI Hero, the first blockchain game from Binaryx, lets players explore different plot options through AI randomness; similarly, in the virtual companion game Sleepless AI, players can unlock personalized gameplay through different interactions based on AIGC and LLMs.

DAO: AI is also expected to be applied to DAOs, helping to track community interactions, record contributions, reward the members who contribute most, vote by proxy, and so on. For example, ai16z uses an AI Agent to gather on-chain and off-chain market information, analyze community consensus, and make investment decisions in combination with suggestions from DAO members.

The significance of AI+Web3 integration: Tower and Square

In the heart of Florence, Italy, lies the central square, the most important political gathering place for locals and tourists alike. Here stands a 95-meter town hall tower whose contrast with the square creates a dramatic aesthetic effect. It inspired Harvard history professor Niall Ferguson to explore the world history of networks and hierarchies in his book 'The Square and the Tower', showing the ebb and flow of the two over time.

This metaphor fits the relationship between AI and Web3 today remarkably well. Looking at the long-term, non-linear history of the two, squares are more likely than towers to produce new and creative things, yet towers retain their legitimacy and strong vitality.

With technology companies able to concentrate computing power and data, AI has unleashed unprecedented imagination, prompting the major tech giants to place big bets, from a variety of chatbots to successive iterations of 'underlying large models' such as GPT-4 and GPT-4o. An automatic programming robot (Devin) and Sora, with its preliminary ability to simulate the real physical world, have appeared one after another, stretching the imagination of what AI can do without limit.

At the same time, AI is essentially a large-scale, centralized industry, and this technological revolution will push the technology companies that gradually gained structural dominance in the 'Internet era' toward an even narrower peak. The enormous power, monopolistic cash flow, and vast datasets needed to dominate the intelligent era raise the barriers around them even higher.

As the tower grows taller and the decision-makers behind the curtain grow fewer, the centralization of AI brings many hidden dangers. How can the crowds gathered in the square avoid the shadow beneath the tower? This is the question Web3 hopes to address.

Essentially, the inherent properties of blockchain can improve artificial intelligence systems and open up new possibilities, mainly:

- 'Code is law' in the age of artificial intelligence: smart contracts and cryptographic verification make the system's rules transparent and self-executing, delivering rewards to the participants who come closest to the goal.

- Token economy: shape and coordinate participants' behavior through token mechanisms such as staking, slashing, token rewards, and penalties.

- Decentralized governance: it leads us to question sources of information and encourages a more critical and discerning approach to artificial intelligence technology, guarding against bias, misinformation, and manipulation, and ultimately nurturing a better informed and more empowered society.
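
The token-economy point above can be made concrete with a small sketch. This is a toy in-memory model and not the mechanism of any project named in this article: the `StakingLedger` class, its `slash_percent` parameter, and the rule that slashed tokens are redistributed to honest participants are all assumptions chosen for illustration. A real protocol would enforce such rules in a smart contract.

```python
from dataclasses import dataclass, field


@dataclass
class StakingLedger:
    """Hypothetical toy ledger: stake, slash, and reward in whole tokens."""
    slash_percent: int = 10                      # assumed penalty rate
    stakes: dict = field(default_factory=dict)   # participant -> staked tokens

    def stake(self, who: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("stake must be positive")
        self.stakes[who] = self.stakes.get(who, 0) + amount

    def settle(self, honest: set, reward_pool: int) -> dict:
        """Slash everyone outside `honest`, then pay honest stakers pro rata."""
        # Penalty: misbehaving stakers lose slash_percent of their stake.
        slashed_total = 0
        for who in self.stakes:
            if who not in honest:
                penalty = self.stakes[who] * self.slash_percent // 100
                self.stakes[who] -= penalty
                slashed_total += penalty

        # Reward: the pool plus slashed tokens is split by stake weight.
        honest_stake = sum(s for w, s in self.stakes.items() if w in honest)
        payouts = {}
        if honest_stake > 0:
            pot = reward_pool + slashed_total
            for who, staked in self.stakes.items():
                if who in honest:
                    payouts[who] = pot * staked // honest_stake
            for who, amount in payouts.items():
                self.stakes[who] += amount
        return payouts


ledger = StakingLedger(slash_percent=10)
ledger.stake("alice", 100)
ledger.stake("bob", 300)
ledger.stake("carol", 200)
payouts = ledger.settle(honest={"alice", "bob"}, reward_pool=20)
print(payouts)        # {'alice': 10, 'bob': 30}
print(ledger.stakes)  # {'alice': 110, 'bob': 330, 'carol': 180}
```

In this sketch, good behavior earns a share proportional to the amount staked, while misbehavior costs a fixed fraction of the stake. Integer division is used so that balances stay in whole tokens; any rounding remainder is simply left undistributed.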

The development of AI has also brought new vitality to Web3. The impact of Web3 on AI may need time to prove itself, but the impact of AI on Web3 is immediate, as is evident both in the Meme frenzy and in the AI Agents that help lower the barrier to entry for on-chain applications.

At a time when Web3 is dismissed as the self-indulgence of a small group of people and is dogged by doubts that it merely replicates traditional industries, the addition of AI offers a foreseeable future: a more stable and scalable base of Web2 users, and more innovative business models and services.

We live in a world where 'towers and squares' coexist. Although AI and Web3 have different timelines and starting points, their ultimate goal is the same: making machines serve humanity better. No one can define a rushing river, and we look forward to seeing the future of AI+Web3.

Statement:

- This article is reproduced from [GateTechFlow], and the copyright belongs to the original author [Coinspire]. If you have any objection to the reprint, please contact the Gate Learn team, which will handle it as soon as possible according to the relevant procedures.

- Disclaimer: The views expressed in this article are solely those of the author and do not constitute investment advice.

- Other language versions of this article are translated by the Gate Learn team. Unless Gate.io is mentioned, translated articles may not be copied, distributed, or plagiarized under any circumstances.
